Documentation and metadata

I have never documented my data before. I have both qualitative and quantitative data and I work on a collaborative project. Where do I start?

1. Do not panic. Much documentation is simply good research practice, so you are probably already doing much of it.

2. Start early! Careful planning of your documentation at the beginning of your project helps you save time and effort. Do not leave the documentation for the very end of your project. Remember to include procedures for documentation in your data management planning.

3. Think about the information that is needed in order to understand the data. What will other researchers and re-users need in order to understand your data?

4. Create a separate documentation file for the data that includes the basic information about the data. You can also create similar files for each data set. Remember to organise your files so that there is a connection between the documentation file and the data sets.

5. Plan where to deposit the data after the completion of the project. The repository probably follows a specific metadata standard that you can adopt.

6. Document consistently throughout the project. Data documentation gives contextual information about your dataset(s). It specifies the aims and objectives of the original project and harbours explanatory material including the data source, data collection methodology and process, dataset structure and technical information. Rich and structured information helps you to identify a dataset and make choices about its content and usability.

TIP Use English for documentation. It increases the chance your data are understood and reused.

What is metadata and how do I document my data?

Start getting familiar with metadata by watching this video: Alexander Jedinger discusses metadata and data documentation. (This video is also available on Zenodo)

Please cite this video as: Jedinger, Alexander. (2020). What Is Metadata and How Do I Document My Data?. Presented at the CESSDA Training Days 2019 (CTD2019), Cologne, Germany: Zenodo. http://doi.org/10.5281/zenodo.3923956

Systematically documented research data is the key to making the data publishable, discoverable, citable and reusable. Clear and detailed documentation improves the overall data quality. It is vital to document both the study for which the data has been collected and the data itself. These two levels of documentation are called project-level and data-level documentation.

Project-level documentation

The project-level documentation explains the aims of the study, what the research questions/hypotheses are, what methodologies were used, what instruments and measures were used, etc. The questions that your project-level documentation should answer are set out in more detail below:

Describe the project history, its aims, objectives, concepts and hypotheses, including:

- The title of the project;

- Subtitle;

- Author(s)/creator(s) of the dataset;

- Other co-workers and their roles (person, research group or organisation that participated in the study and their roles);

- The institution of the author(s)/creator(s);

- Funders;

- Grant numbers;

- References to related projects;

- Publications from the data.

Describe what is in a dataset:

- Kind of data (interviews, images, questionnaires, etc.);

- File size (in bytes), file format of the data files and relationships between files;

- Description of data file(s): version and edition, structure of the database, associations, links between files, external links, formats, compatibility.

Describe how the data was acquired:

- The methodology and technique used in collecting and creating the data;

- Description of all the sources the data originate from (What is the subject of study? E.g. periodicals, datasets created by others?) together with an explanation of how and why it got to the present place (provenance);

- The methods/modes of data collection (for example):

- The instruments, hardware and software used to collect the data;

- Digitisation or transcription methods;

- Data collection protocols;

- Sampling design and procedure;

- Target population, units of observation.

Describe the:

- Data collector(s);

- Date of data collection;

- Geographical coverage of the data (e.g. nation).

Describe your workflow and specific tools, instruments, procedures, hardware/software or protocols you might have used to process the data, like:

- Data editing, data cleaning;

- Coding and classification of data.

Describe if and how the data were manipulated or modified:

- Modifications made to data over time since their original creation and identification of different versions of datasets;

- Other possible changes made to the data;

- Anonymisation;

- For time series or longitudinal surveys: changes made to methodology, variable content, question text, variable labelling, measurements or sampling.

Describe how the quality of the data has been assured:

- Checking for equipment and transcription errors;

- Quality control of materials;

- Data integrity checks;

- Calibration procedures;

- Data capture resolution and repetitions;

- Other procedures related to data quality such as weighting, calibration, reasons for missing values, checks and corrections of transcripts, transformations.

Describe the use and access conditions of the data:

- Where the data can be found (which data repository);

- Permanent identifiers;

- Access conditions such as embargo;

- Parts of the data that are restricted or protected;

- Licences;

- Data confidentiality;

- Copyright and ownership issues;

- Citation information.
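As a rough illustration of how the project-level checklist above could be captured in a single plain-text file, here is a minimal Python sketch. The field names are a small selection from the lists above, and every value is a made-up placeholder rather than real project data; the function name `render_readme` is likewise hypothetical.

```python
# Hypothetical project-level fields; all values are placeholders for illustration.
project_doc = {
    "Title": "Example Study on Exercise Habits",
    "Creator(s)": "A. Researcher (Example University)",
    "Funder and grant number": "Example Foundation, grant 0000",
    "Kind of data": "Survey questionnaire; interview transcripts",
    "Data collection method": "Web survey; face-to-face interviews",
    "Date of data collection": "2023-01-10 to 2023-03-31",
    "Geographical coverage": "Example country",
    "Processing and anonymisation": "Names replaced with pseudonyms",
    "Access conditions and licence": "To be agreed with the repository",
}


def render_readme(fields: dict[str, str]) -> str:
    """Render the fields as 'Name: value' lines under a short heading."""
    lines = ["PROJECT-LEVEL DOCUMENTATION", ""]
    for name, value in fields.items():
        lines.append(f"{name}: {value}")
    return "\n".join(lines) + "\n"


readme_text = render_readme(project_doc)
print(readme_text)
```

The resulting text could be saved next to the data files, for example as a file named `README.txt`. A repository may ask for different or additional fields, so the selection here should be treated as a starting point only.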

Data-level documentation

Data-level or object-level documentation provides information at the level of individual objects such as pictures or interview transcripts or variables in a database. You can embed data-level information in data files. For example, in interviews, it is best to write down the contextual and descriptive information about each interview at the beginning of each file, and for quantitative data, variable and value names can be embedded within the data file itself.

Variable-level annotation should be embedded within a data file itself. If you need to compile an extensive variable level documentation, you can create it by using a structured metadata format.

Data-level documentation for quantitative data

For quantitative data, the following information is needed:

- Information about the data file

Data type, file type and format, size, data processing scripts.

- Information about the variables in the file

The names, labels and descriptions of variables, their values, a description of derived variables or, if applicable, frequencies, basic contingencies etc. The exact original wording of the question should also be available. Variable labels should:

- Be brief with a maximum of 80 characters;

- Indicate the unit of measurement, where applicable;

- Reference the question number of a survey or questionnaire, where applicable.

Variable: 'Q11eximp'

Variable label: 'Q11: How important is exercise for you?'

Value labels: 1: Very unimportant. 2: Unimportant. 3: Neutral. 4: Important. 5: Very important.

The label gives the unit of measurement and a reference to the question number (Q11).

- Information about the cases in the file

A specification of each case (units of research, e.g. a respondent), if applicable.

- Names, labels and descriptions for variables, records and their values

- Description of the missing values at each variable

- Description of the weighting variable

- Explanation or definition of codes and classification schemes used
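The variable-level guidance above can be sketched as a small codebook structure with a check on the label rules. This is only an illustration in Python: the dictionary layout, the function name `check_labels` and the missing-value code 9 are assumptions made for the example, while the variable name, label and value labels are taken from the Q11 example above.

```python
MAX_LABEL_LENGTH = 80  # maximum label length recommended in the guidance above

# One entry per variable. The layout of this dictionary is an assumption.
codebook = {
    "Q11eximp": {
        "label": "Q11: How important is exercise for you?",
        "values": {
            1: "Very unimportant",
            2: "Unimportant",
            3: "Neutral",
            4: "Important",
            5: "Very important",
        },
        "missing": {9: "No answer"},  # hypothetical missing-value code
    },
}


def check_labels(book):
    """Return a list of human-readable problems found in the codebook."""
    problems = []
    for name, entry in book.items():
        label = entry.get("label", "")
        if not label:
            problems.append(f"{name}: missing variable label")
        elif len(label) > MAX_LABEL_LENGTH:
            problems.append(f"{name}: label longer than {MAX_LABEL_LENGTH} characters")
        if not entry.get("values"):
            problems.append(f"{name}: no value labels")
    return problems


print(check_labels(codebook))
```

A check like this does not replace embedding labels in the data file itself; it is merely one way to catch overlong or missing labels before the file is deposited.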

Storing documentation

Whenever possible, embed data documentation within a file.

In the example from the UK Data Service (n.d.c), an SPSS file has two tabs, Data view and Variable view. The example shows the Variable view tab.

Background and contextual information and participant details of interviews, observations or diaries can be described at the beginning of a file as a header or summary page.

Data-level documentation for qualitative data

For qualitative data, the following information is needed:

- Textual data file (for example, interview)

- Key information about participants (such as age, gender, occupation, location, relevant contextual information);

- For qualitative data collections (for example image or interview collections) you may wish to provide a data list that provides information that enables the identifying and locating of relevant items within a data collection:

- The list contains key biographical characteristics and thematic features of participants such as age, gender, occupation or location, and identifying details of the data items;

- For image collections, the list holds key features for each item;

- The list is created from an initial list of interviews, field notes or other materials provided by the data depositor.

For textual data, background data are systematically entered at the beginning of each data unit (e.g. interview transcript) in a standardised manner.

The following example from the Finnish Social Science Data Archive presents a typical transcript of an interview with only one interviewee. The transcript of each interview in the data has been saved in a separate file, often in .rtf or .doc(x). Background data fields are entered in the following manner at the beginning of each transcription file.

Beginning of the transcript file

Interview date: 08.02.2013 [=8 February 2013]

Interviewer: Matt Miller

Pseudonym of interviewee: Ian (not the real first name of the interviewee)

Occupation of interviewee: Journalist

Age of interviewee: 32

Gender of interviewee: Male
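One benefit of entering background fields in a standardised manner is that they can be read back automatically. The following Python sketch parses a header like the one above. It assumes that the header lines are contiguous, that each has the form "Field: value", and that the first blank line ends the header; the function name `parse_header` and the line of interview text after the header are invented for the example.

```python
from datetime import datetime

# Header fields as in the example above, followed by a made-up line of interview text.
transcript_text = """Interview date: 08.02.2013
Interviewer: Matt Miller
Pseudonym of interviewee: Ian
Occupation of interviewee: Journalist
Age of interviewee: 32
Gender of interviewee: Male

Interviewer: Thank you for taking part in this interview.
"""


def parse_header(text):
    """Collect 'Field: value' pairs up to the first blank line."""
    header = {}
    for line in text.splitlines():
        if not line.strip():
            break  # the first blank line ends the header
        key, sep, value = line.partition(":")
        if sep:
            header[key.strip()] = value.strip()
    return header


header = parse_header(transcript_text)
# The example uses the day.month.year date format.
interview_date = datetime.strptime(header["Interview date"], "%d.%m.%Y").date()
print(header["Pseudonym of interviewee"], interview_date.isoformat())
```

Stopping at the first blank line matters here because speaker turns in the transcript body may also start with a word followed by a colon.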

- Audiovisual data files

For some types of data (image, audio or video files) the file format does not always allow recording background information at the beginning of the data file. In such cases, the best practice is to store background information in a manually created data list or a separate text file: a data list which accompanies the data collection.

- Provide the following information on each image: creator, date, location, subject, content, copyright, keywords, equipment used;

- Some image files have embedded technical metadata (You may use tools to extract technical metadata from images, such as ExtractMetadata.com (n.d.)).

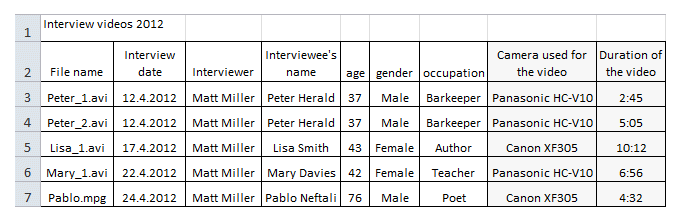

In this case - shown on the site of the Finnish Social Science Data Archive (2016) - the background data fields are manually entered in table form using Excel (or Open Office Calc program). The data collected were video-recorded interviews. The data list contains background information related to the interviewee and the interview event as well as information on the model and brand of the camera used and the length of the video (in minutes).

See also another data list example from the UK Data Service (n.d.c).
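A data list like the one described above does not have to be typed into a spreadsheet by hand; it can also be kept as a CSV file, which spreadsheet programs open directly. The Python sketch below is one possible way to do this. The column names, file names, camera model and durations are all hypothetical placeholders, not values from the archive's example.

```python
import csv
import io

# Hypothetical columns for a data list describing video-recorded interviews.
FIELDS = [
    "file_name",
    "interview_date",
    "interviewee_pseudonym",
    "camera_model",
    "length_minutes",
]

# Placeholder rows; real entries would describe the actual recordings.
rows = [
    {
        "file_name": "20130311_interview01_video.mp4",
        "interview_date": "2013-03-11",
        "interviewee_pseudonym": "Ian",
        "camera_model": "ExampleCam X1",
        "length_minutes": 42,
    },
    {
        "file_name": "20130312_interview02_video.mp4",
        "interview_date": "2013-03-12",
        "interviewee_pseudonym": "Anna",
        "camera_model": "ExampleCam X1",
        "length_minutes": 37,
    },
]


def data_list_csv(entries):
    """Return the data list as CSV text with a header row."""
    buffer = io.StringIO(newline="")
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(entries)
    return buffer.getvalue()


print(data_list_csv(rows))
```

The CSV text can be written to a file that is stored alongside the recordings, so that the list and the data collection travel together.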

- Periodicals, magazines, journal articles

Among materials you use for qualitative data analysis, there may be online periodicals, magazines or journal articles. The information about all such resources must be kept in separate files:

- Material collected from online periodicals: save references to web resources, like URLs, and do not forget they may change over time. To be sure information is not lost, articles should be copied into a word processing program;

- Materials from periodicals: When articles, photographs and other material are collected from periodicals for research purposes, bibliographic information should be carefully detailed (author(s), title, date of publication etc.);

- When you analyse articles, make a list of them, sort them alphabetically or chronologically in the order they were analysed in the course of research.

Storing documentation

- Write the documentation into a separate, well-structured file, and associate that with the data file. You may use the same filename stem in order to strengthen the file-metadata association. For example: 20130311_interviews_audio, 20130311_interviews_trans, 20130311_interviews_image, 20130311_interviews_metadata. The latter part of the name can be used to convey the specifics of the file. In this case "audio" means audio tape and "trans" a transcription of the audio tape;

- Data-level documentation can be embedded within a data file. For example, in interviews, it is best to write down the contextual and descriptive information about each interview at the beginning of each file;

- If you have a large amount of metadata or large amounts of data that will need metadata you can use a standard specific database for this purpose (such as the DDI Codebook (DDI Alliance, n.d.-a)).
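The shared filename stem described above also makes it easy to check programmatically that every set of data files has an accompanying metadata file. The following Python sketch assumes names of the form `<stem>_<kind>.<extension>`; the file extensions, the extra file dated 20130412 and the function names are assumptions added for the example.

```python
from collections import defaultdict

# File names following the pattern above; the extensions are assumed.
file_names = [
    "20130311_interviews_audio.wav",
    "20130311_interviews_trans.rtf",
    "20130311_interviews_image.jpg",
    "20130311_interviews_metadata.txt",
    "20130412_interviews_audio.wav",  # hypothetical file with no metadata yet
]


def split_name(file_name):
    """Split 'stem_kind.ext' into (stem, kind)."""
    base = file_name.rsplit(".", 1)[0]
    stem, _, kind = base.rpartition("_")
    return stem, kind


def group_by_stem(names):
    """Map each stem to a dictionary of {kind: file name}."""
    groups = defaultdict(dict)
    for name in names:
        stem, kind = split_name(name)
        groups[stem][kind] = name
    return dict(groups)


def stems_without_metadata(groups):
    """List the stems that have no '_metadata' file."""
    return sorted(stem for stem, kinds in groups.items() if "metadata" not in kinds)


groups = group_by_stem(file_names)
print(stems_without_metadata(groups))
```

In this sketch the last underscore separates the stem from the part that conveys the specifics of the file, which mirrors the naming example above.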

Metadata: machine readable data documentation

Metadata or "data about data" are descriptors that facilitate cataloguing data and data discovery. Metadata are intended for machine-reading. When data is submitted to a trusted data repository, the archive generates machine-readable metadata. Machine-readable metadata help to explain the purpose, origin, time, location, creator(s), terms of use, and access conditions of research data.

Below we provide you with examples of:

- Metadata templates (for easy starting)

If you do not quite know yet what metadata you should generate (what fields are needed), have a look at the metadata templates provided. Some of them are very simple and can, therefore, help to create basic documentation.

- Metadata standards (for when you need your metadata to be very structured).

Metadata standards may at first glance seem daunting. They are used by data archives for enhancing discoverability, interoperability and reusability. When you submit your dataset to a trusted data repository, these standards are automatically applied.

Metadata can, at its simplest, be stored in a single text file. However, you can also use a metadata template to help you structure your metadata or to see how your metadata appears in *.html.

Below we provide examples of metadata templates that you can use when compiling documentation, or simply consult for inspiration to see which fields are typically required. It is always possible to include additional documentation beyond what is suggested.

- Create a codebook about your research to accompany the dataset (DDI Alliance, n.d.a);

- Download the Dublin Core Metadata Template available from the University of Rochester (2013);

- Have a look at the Georgia Tech Library (n.d.) Metadata Template;

- Use the Dublin Core Metadata Generator (dublincoregenerator, n.d.);

- Have a look at the Cornell University (n.d.) guide to writing “readme” style metadata (with downloadable template);

- Create ISO 19115: Geographic information - Metadata using one of the metadata editors listed on GeoPlatform.

You may want your metadata to be very structured. For that purpose, you can choose a metadata standard or a tool (software that has been developed to capture or store metadata) to help you add and organise your documentation. Many standards are discipline-specific. These will help you to add metadata to the workflow as they have been created to suit the needs of research data.

Remember that you do not generally need to generate machine-readable metadata by yourself. The repository where you may want to deposit your data will do that for you. When you are depositing your data the repository will require a data documentation document from you and will convert the documentation into machine-readable metadata.

The recommended standard for research in the social sciences is the DDI metadata standard.

DDI (Data Documentation Initiative) (DDI Alliance, n.d.b) is an international standard for describing the data produced by surveys and other observational methods in the social, behavioural, economic, and health sciences. Expressed in XML, the DDI metadata specification supports the entire research data lifecycle.

Common fields in the DDI include:

- Title

- Alternate Title

- Principal Investigator

- Funding

- Bibliographic Citation

- Series Information

- Summary

- Subject Terms

- Geographic Coverage

- Time Period

- Date of Collection

- Unit of Observation

- Universe

- Data Type

- Sampling

- Weights

- Mode of Collection

- Response Rates

- Extent of Processing

- Restrictions

- Version History
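While you are still drafting documentation, the field list above can double as a completeness checklist. The Python sketch below compares a draft against that list. The field names are copied from the list; the draft values are taken from the FSD2926 example dataset cited in this guide, but how they are assigned to fields here is an assumption made for illustration, and `missing_fields` is a hypothetical helper name.

```python
# Field names as listed above.
DDI_COMMON_FIELDS = [
    "Title", "Alternate Title", "Principal Investigator", "Funding",
    "Bibliographic Citation", "Series Information", "Summary", "Subject Terms",
    "Geographic Coverage", "Time Period", "Date of Collection",
    "Unit of Observation", "Universe", "Data Type", "Sampling", "Weights",
    "Mode of Collection", "Response Rates", "Extent of Processing",
    "Restrictions", "Version History",
]

# A partly filled draft; the mapping of values to fields is illustrative only.
draft = {
    "Title": "Intercultural Urban Public Space in Toronto 2011-2013",
    "Principal Investigator": "Galanakis, Michail",
    "Geographic Coverage": "Canada",
    "Data Type": "Qualitative",
    "Mode of Collection": "Face-to-face interview; focus group",
    "Summary": "",  # present but still empty, so it counts as missing
}


def missing_fields(doc):
    """Return the listed fields that are absent or blank in the draft."""
    return [name for name in DDI_COMMON_FIELDS if not str(doc.get(name, "")).strip()]


print(missing_fields(draft))
```

Not every field applies to every dataset (weights, for instance, are rarely relevant to qualitative interviews), so the output is a prompt for review rather than a list of errors.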

MIDAS Heritage (Historic England, 2012) is a British cultural heritage standard for recording information on buildings, archaeological sites, shipwrecks, parks and gardens, battlefields, areas of interest and artefacts.

VRA Core (2015) is a standard for the description of images and works of art and culture.

ISO 19115 is a schema for describing geographic information and services. It provides information about the identification, the extent, the quality, the spatial and temporal schema, the spatial reference, and the distribution of digital geographic data.

Standards that are not tied to a single discipline include:

- Dublin Core (DCMI, n.d.);

- DataCite Metadata Schema (Datacite, n.d.);

- PREMIS (n.d.).

In its simplest form, the Dublin Core consists of 15 fields that can describe almost any online resource:

- Title

- Creator

- Subject

- Description

- Publisher

- Contributor

- Date

- Type

- Format

- Identifier

- Source

- Language

- Relation

- Coverage

- Rights
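To give an impression of what these 15 fields look like in machine-readable form, the Python sketch below builds a small XML record with the standard library. The namespace `http://purl.org/dc/elements/1.1/` is the one used for the Dublin Core element set; the wrapper element name `metadata`, the function name `build_record` and the way values from the FSD2926 citation are assigned to fields (for example, treating the distributor as publisher) are assumptions made for the example.

```python
import xml.etree.ElementTree as ET

DC_NS = "http://purl.org/dc/elements/1.1/"  # Dublin Core element set namespace
ET.register_namespace("dc", DC_NS)

# The 15 fields listed above, in lower case as XML element names.
DC_ELEMENTS = [
    "title", "creator", "subject", "description", "publisher",
    "contributor", "date", "type", "format", "identifier",
    "source", "language", "relation", "coverage", "rights",
]


def build_record(values):
    """Build an XML element with one dc:* child per supplied value."""
    root = ET.Element("metadata")  # wrapper name chosen arbitrarily for this sketch
    for name in DC_ELEMENTS:
        for value in values.get(name, []):
            child = ET.SubElement(root, f"{{{DC_NS}}}{name}")
            child.text = value
    return root


# Values taken from the dataset citation in this guide; the mapping is illustrative.
record = build_record({
    "title": ["Intercultural Urban Public Space in Toronto 2011-2013"],
    "creator": ["Galanakis, Michail"],
    "publisher": ["Finnish Social Science Data Archive"],
    "date": ["2014-02-13"],
    "type": ["Dataset"],
    "identifier": ["http://urn.fi/urn:nbn:fi:fsd:T-FSD2926"],
    "language": ["en"],
})

xml_text = ET.tostring(record, encoding="unicode")
print(xml_text)
```

Each field takes a list because Dublin Core elements are repeatable, for example when a dataset has several creators. Repositories usually generate such records for you, so this is meant to show the structure rather than a step you must perform.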

For an example of how to apply the metadata standard DDI, have a look at a dataset in the Finnish Social Science Data Archive (Galanakis, Michail (University of Helsinki): Intercultural Urban Public Space in Toronto 2011-2013 [dataset]. Version 1.0 (2014-02-13). Finnish Social Science Data Archive [distributor]. http://urn.fi/urn:nbn:fi:fsd:T-FSD2926).

The machine-readable XML file looks like this.

| Field | Value |
| --- | --- |
| Study title | Intercultural Urban Public Space in Toronto 2011-2013 |
| Dataset ID Number | FSD2926 |
| Persistent identifier | |
| Data Type | Qualitative |
| Authors | |
| Abstract | The dataset contains transcripts of interviews conducted mainly in Toronto, Canada, during 2011 and 2012. A few interviews were conducted in Vancouver and Guelph as well. The main themes of the interviews were multiculturalism, interculturalism, diversity and public space, and how the participants' perceptions of interculturalism and public space. The interviewees were professionally or voluntarily involved in the physical or social planning process, in providing services for youth, or in dealing with managing diversity (in policy-making, planning, arts etc). They were community activists, professional designers, managers of public spaces, social services providers, or young persons who represented the users of services aimed at communities. The interviews were reflective, and questions asked changed according to what the interviewees talked about. The three main research questions were what the participants considered public spaces to be, how they defined interculturalism and, for expert interviews, how they planned/designed for diversity. Toronto is a very multicultural city, and one of the main aims of the study was to learn how Toronto's public space is managed and how public space could be used more creatively for the benefit of diverse groups. Other topics that came up included the exclusion of youth, crime, services, and facilities for youth, social and educational inequality, unemployment, public transport, street art, safety, police harassment, and privatization of public space. In addition to 25 one- and two-person interviews, there was one focus group interview of 13 young men and women. Interviewee age ranged from adolescents to senior citizens. Background variables included the interviewee's occupation, gender, and age. |
| Keywords | citizen participation; communities; cultural interaction; decision-making; ethnic groups; immigration; multiculturalism; politicians; public spaces; services for young people; social inequality; urban development; urban environment; urban sociology; urban spaces |
| Topic Classification | |
| Series | |
| Distributor | Finnish Social Science Data Archive |
| Access | The dataset is (B) available for research, teaching, and study. |
| Data Collector | |
| Collection Dates | 2011-05-19 – 2013-07-08 |
| Nation | Canada |
| Geographical Coverage | Canada, Ontario, British Columbia, Toronto, Guelph, and Vancouver |
| Analysis/Observation Unit Type | Individual; Event/Process |
| Universe | Public space stakeholders in Toronto, Guelph, and Vancouver |
| Time Method | Cross-section |
| Sampling Procedure | Non-probability: Purposive; Non-probability: Respondent-assisted. The researcher selected the interviewees on the basis of their role related to public space. The interviewees themselves suggested other people to contact. Focus group participants were recruited by a voluntary worker working in the youth centre Spot in Toronto from among those willing to participate. |
| Collection Mode | Face-to-face interview; Focus group |
| Research Instrument | Interview scheme and/or themes |
| Data Processing | The researcher had anonymized the names of the interviewees before submitting the transcripts to the FSD. The participants were given the option of choosing that some things they talked about would be left out of the transcript. These sections, marked with [off the record] notation, do not appear in the transcripts. During data processing, the data archive anonymised personal information related to third parties mentioned in the interviews, for instance, their address, person name, occupation or other information that might lead to the disclosure of identity. The anonymised information is marked with double brackets, for instance, [[name removed, a man]] replacing a person name. |
| Data Files | 26 interview transcripts stored as rtf files, of which 19 are individual interviews, six interviews of two persons and one focus group interview of 13 young participants. In addition, the archive has created a HTML folder of the rtf files. |
| Data File Language | Downloaded data package may contain different language versions of the same files. The data files of this dataset are available in English. FSD translates quantitative data into English on request, free of charge. Qualitative data are available in their original language only and are not translated. More information on ordering data translation. |
| Data Version | 1.0 |
| Citation Requirement | The data and its creators shall be cited in all publications and presentations for which the data have been used. The bibliographic citation may be in the form suggested by the archive or in the form required by the publication. |
| Bibliographical Citation | Galanakis, Michail (University of Helsinki): Intercultural Urban Public Space in Toronto 2011-2013 [dataset]. Version 1.0 (2014-02-13). Finnish Social Science Data Archive [distributor]. http://urn.fi/urn:nbn:fi:fsd:T-FSD2926 |
| Deposit Requirement | The user shall notify the archive of all publications where she or he has used the data. |
| Special Terms and Conditions for Access | Direct quotations from the data used in publications or presentations must not contain indirect identifiers (for example, detailed work history, unique life events) that might allow the identification of a third party mentioned in the interview. |
| Disclaimer | The original data creators and the archive bear no responsibility for any results or interpretations arising from the re-use of the data. |
| Related Publications | Galanakis, M. (2013). Intercultural Public Space in Multicultural Toronto. Canadian Journal of Urban Research, 22(1), pp. 67-89. Galanakis, M. (2015). Intercultural Public Space and Activism, in G. Marconi & E. Ostanel (eds) The Intercultural City: Migration, Minorities and the Management of Diversity. London: IB Tauris. Galanakis, Michail (2015). Public Spaces for Youth? The Case of the Jane-Finch Neighbourhood in Toronto. Space and Culture. Sage Online Publications, DOI:10.1177/1206331215595731 Leikkilä, J., Faehnle, M., & Galanakis, M. (2013). Urban Nature and Social Diversity Promoting Interculturalism in Helsinki by Planning Urban Nature, Urban Forestry & Urban Greening, 12(2), pp. 183-190. |

Metadata for new data types – new standards still under development

To provide metadata for social media data and transaction data, the metadata standards by the Data Documentation Initiative (DDI) should serve as the guiding framework. Importantly, however, the DDI standard is "insufficient to document all the details required for reproducibility of a social media dataset" (Kinder-Kurlanda et al. 2017: 3). For example, the DDI format does not allow describing biases caused by data mining interfaces of social media platforms, changes in data availability and formats, explanations about code and scripts used in collection, cleaning and analysis etc. Such information can be described only in an unstructured manner as an additional comment in the standard's form.

Together with other CESSDA partners, GESIS is currently developing recommendations for the provision of metadata for new data types (esp. social media data).